Convex optimization

This lecture gives an introduction to the basics of convex optimization theory in infinite-dimensional spaces. We are mainly interested in non-smooth minimization problems (i.e., problems for which no classical derivatives exist). Such problems arise in many modern applications, such as imaging and machine learning.

In particular, the following topics are covered:

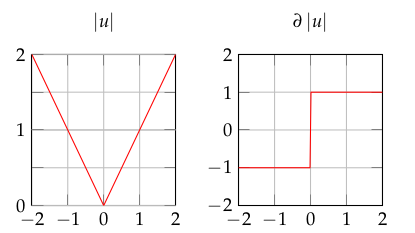

- Convex functions and subderivatives

- Constrained minimization problems

- Convex conjugates

- Proximal maps

- Primal and dual problem formulation

- Minimization schemes, in particular splitting approaches

Note that it is not mandatory to have taken any other course on optimization. However, it is an advantage to be familiar with infinite-dimensional spaces (Hilbert and Banach spaces, operator norm, convergence, etc.).

Literature:

- V. Barbu and Th. Precupanu, Convexity and Optimization in Banach Spaces

- I. Ekeland and R. Témam, Convex Analysis and Variational Problems

- H. Bauschke and P. Combettes, Convex Analysis and Monotone Operator Theory in Hilbert Spaces

- J. Peypouquet, Convex Optimization in Normed Spaces: Theory, Methods and Examples

- M. Hinze, R. Pinnau, M. Ulbrich, S. Ulbrich, Optimization with PDE Constraints (only used for descent methods)

Other useful material:

- Convex analysis lecture notes by Prof. G. Wanka

- Convex optimization lecture notes by Prof. J. Peypouquet